AI Language Models: The Case for Cultivating Context

Human beings’ unique relationship with language makes training AI language models an uphill battle. Language separates us from our primate cousins. As noted by Mark Pagel in a recent BMC Biology paper, “It is unlikely that any other species, including our close genetic cousins the Neanderthals, ever had language.” However, language alone isn’t our only differentiator as a species. Humans also possess the “urge to connect.” That is, the drive to exchange thoughts and ideas with others.

These two traits have created language that is both versatile and valuable, but eternally vexing to engineers and developers looking to bridge the gap between technology and the human condition.

Artificial intelligence (AI) is one recent effort to imbue programs with human-like qualities. It’s a long road to artificial general intelligence, or general AI, which could mimic humans’ general cognitive abilities. However, we are making inroads with specific AI use cases that could change how we interact with the world and with each other.

AI language models, also called large language models (LLMs), are one area of AI undergoing rapid evolution. In this piece, we’ll explore the unique properties of human language, the barriers to effective AI understanding, and how evolving LLMs may pave the way for a new kind of conversation.

AI Language Models: From Primates to Programs

Studies have shown that primates can recognize and respond to specific language cues — one gorilla even had a “vocabulary” of over 2,000 words! Programs are also capable of parsing language. For example, first-generation chatbots could pick out key words and phrases to help users find specific content or refer them to support.

Humans, however, go beyond these basics thanks to a key concept: context.

Consider a conversation between two friends. The first asks how the second is doing, and the second replies “fine”. The word is easy to parse: Merriam-Webster defines it as “well or healthy; not sick or injured.” Despite the obvious meaning, however, the first friend replies “I’m here if you need to talk.”

What happened? Put simply, the first friend both heard the word and saw the connecting context. Common contextual considerations include tone of voice, facial expressions, volume, and body language. By aggregating this data, humans are capable of understanding both the surface and subtextual meanings of language. This isn’t to say we’re perfect — our lives are full of misread situations and misunderstandings because context wasn’t clear. However, while it may not always be 100% accurate, the ability to capture context is why even the smartest primates can’t quite reach human intelligence levels.

It is also why language analysis technologies have historically struggled to move beyond basic question-and-answer exchanges. However, AI language models have made remarkable advances in recent years.

Can AI Language Models Break Barriers?

Along with context, human language is also unique because it’s compositional. This property allows humans to express their thoughts in sentences that recognize multiple tenses and use a combination of subjects, objects, and verbs. With just 25 subjects, 25 objects, and 25 verbs, it’s possible to create more than 15,000 sentences.
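The arithmetic behind that figure is a simple product: if each sentence picks one subject, one verb, and one object, the count is 25 × 25 × 25. A minimal sketch, assuming exactly one word per slot (the function name `count_sentences` is illustrative):

```python
def count_sentences(subjects: int, verbs: int, objects: int) -> int:
    """Count subject-verb-object sentences, choosing one word per slot."""
    return subjects * verbs * objects


total = count_sentences(25, 25, 25)
print(total)  # 15625, which is the "more than 15,000" in the text
```

Adding tenses, modifiers, or clauses multiplies the total again, which is why compositional language grows so quickly.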

The compositional nature of human language also makes it possible to create strings of seemingly nonsense words that actually make sense in context. Consider the (in)famous buffalo sentence below:

Buffalo buffalo Buffalo buffalo buffalo buffalo Buffalo buffalo.

While this looks like gibberish, it’s grammatically correct. It lacks punctuation and relative pronouns such as “which” or “that”, but it is possible to understand. Here’s how.

The three capitalized words, in positions one, three, and seven, represent the city of Buffalo.

The lowercase buffalo in positions two, four, and eight refer to groups of the animal, while the two in positions five and six represent the verb buffalo, which means to intimidate or confuse. Putting that all together, the meaning of the sentence becomes:

A group of buffalo, from Buffalo, that are intimidated by a group of buffalo from Buffalo, also intimidate a group of buffalo from Buffalo.
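For readers who prefer to see the parse as data, here is a small sketch that labels each of the eight words with its role. The role list is written by hand to match the reading above; it is not the output of a real parser, and the names `WORDS`, `ROLES`, and `tag_sentence` are illustrative.

```python
# The sentence, split into its eight words.
WORDS = "Buffalo buffalo Buffalo buffalo buffalo buffalo Buffalo buffalo".split()

# Hand-written role for each position (an assumption matching the reading above).
ROLES = ["city", "animal", "city", "animal", "verb", "verb", "city", "animal"]


def tag_sentence(words, roles):
    """Pair every word with its role, keeping sentence order."""
    return list(zip(words, roles))


tagged = tag_sentence(WORDS, ROLES)
for position, (word, role) in enumerate(tagged, start=1):
    print(f"{position}. {word:<8} {role}")
```

The same spelling carries three different roles, and only position and capitalization tell them apart.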

Sure, it’s not an easy read, but with the ability to parse compositional language, it does make sense. While most human sentences aren’t quite so convoluted, we naturally assume that listeners have the ability to combine the obvious meaning of our words with both contextual and compositional clues.

The result is what we’ve seen for years around the development of AI language models: they do well when sentences are simple and straightforward, but struggle when meanings aren’t clear.

Emerging AI language models, however, are changing the game.

The Basics of LLMs

AI language models aren’t new. As artificial intelligence moved from theory to practice, researchers quickly recognized its potential to analyze and understand language. Historically, however, language models were limited in scope and size.

In addition, these models assigned equal weight to each word in a sentence, meaning they weren’t able to parse any meaning beyond the obvious. This made them ideal for simple, self-service tasks. Take first-generation chatbots. They could point users in the direction of specific resources or connect them to customer service agents, but lacked the ability to dive any deeper.
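To make that limitation concrete, here is a minimal sketch of keyword-style routing. It is an illustration of the general approach, not any particular product: the `ROUTES` table, the destinations, and the `route` function are all hypothetical.

```python
import re

# Hypothetical keyword-to-destination table; a real chatbot would define its own.
ROUTES = {
    "refund": "billing help page",
    "password": "account recovery page",
    "shipping": "order tracking page",
}
FALLBACK = "customer service agent"


def route(message: str) -> str:
    """Return the destination for the first known keyword found in the message."""
    words = set(re.findall(r"[a-z]+", message.lower()))
    for keyword, destination in ROUTES.items():
        if keyword in words:
            return destination
    # No keyword matched, so hand the user to a person.
    return FALLBACK


print(route("I forgot my password."))
print(route("I do not want a refund, just an update."))
```

The second message is sent to the billing page even though the customer explicitly does not want a refund. The keyword is matched, but the surrounding context is ignored.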

What’s the difference between AI language models and chatbots? A chatbot is the conversational interface, while a language model is the engine that can power it, and modern models are far more advanced than the keyword matching behind early chatbots.

Large language models are a step change. These models use a deep learning architecture called the transformer. Transformer models are neural networks that learn context and meaning in sequential data, including the data found in human language.

Unlike their smaller-scale progenitors, large-scale models are trained on much larger datasets. Combined with two key transformer innovations, positional encoding and self-attention, these datasets allow LLMs to provide more accurate and more contextually relevant answers.

Positional encoding tags each word with its position in the sentence, which makes it possible for language models to process all of the words at once, rather than one at a time, without losing track of word order. Self-attention, meanwhile, makes it possible for models to assign different weights to different parts of a sentence based on how each word relates to the rest of the input.
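As a rough illustration of positional encoding, the sketch below uses the sinusoidal scheme from the original transformer paper, which is one common choice and not necessarily what any given LLM uses. The word vector and the helper name `add_position` are hypothetical.

```python
import math
from typing import List


def positional_encoding(position: int, d_model: int) -> List[float]:
    """Sinusoidal encoding for one position: sine on even indices, cosine on odd."""
    encoding = []
    for i in range(d_model):
        # Each sine/cosine pair shares one wavelength, set by the even index.
        angle = position / (10000 ** ((i - i % 2) / d_model))
        encoding.append(math.sin(angle) if i % 2 == 0 else math.cos(angle))
    return encoding


def add_position(embedding: List[float], position: int) -> List[float]:
    """Add the position signal to a word vector of the same length."""
    signal = positional_encoding(position, len(embedding))
    return [value + offset for value, offset in zip(embedding, signal)]


# Hypothetical 4-dimensional vector for the word "buffalo".
buffalo = [0.5, -0.2, 0.1, 0.9]
second_word = add_position(buffalo, 1)
fifth_word = add_position(buffalo, 4)
print(second_word != fifth_word)  # True: same word, different positions
```

Because the position is folded into the vector itself, the model can look at every word in parallel and still tell the second "buffalo" from the fifth.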

Together, positional encoding and self-attention make it possible for LLMs to quickly process large volumes of data and produce more accurate query results.
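Self-attention can be sketched in a few lines as well. The version below is a deliberately simplified scaled dot-product attention: it skips the learned query, key, and value projections that real transformers use, and lets every token attend to every other token. The input vectors are hypothetical.

```python
import math
from typing import List

Vector = List[float]


def softmax(scores: Vector) -> Vector:
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a: Vector, b: Vector) -> float:
    """Dot product, used here as the similarity between two tokens."""
    return sum(x * y for x, y in zip(a, b))


def self_attention(tokens: List[Vector]) -> List[Vector]:
    """Rebuild each token as a weighted mix of all tokens in the sequence."""
    scale = math.sqrt(len(tokens[0]))
    outputs = []
    for query in tokens:
        # Score the current token against every token, then normalize.
        weights = softmax([dot(query, key) / scale for key in tokens])
        mixed = [
            sum(w * value[d] for w, value in zip(weights, tokens))
            for d in range(len(query))
        ]
        outputs.append(mixed)
    return outputs


# Three hypothetical 2-dimensional token vectors.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
for row in self_attention(tokens):
    print([round(x, 3) for x in row])
```

Tokens that are more similar to the current one receive larger weights, which is the "different weights to different parts of a sentence" idea in miniature.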

Artificial Intelligence, Real Impact: Large Language Models in Action

It’s one thing to design a language model capable of improving over time to better understand humans and provide relevant responses, but what does this look like in practice?

Here are five examples of large language models in action.

Search Results

Search engines such as Google and Bing are already using LLMs to improve search results. By applying large language models to understand the context of user queries, search engines can provide more accurate, relevant hits.

Content Generation

Tools like ChatGPT and DALL-E are now using AI language models to generate new content based on user criteria. For example, staff might ask ChatGPT to provide subject lines for marketing emails, or offer ideas for a new blog post. DALL-E, meanwhile, can create AI-generated art in the style of one (or many) historic artists.

Customer Service

From a customer service standpoint, chatbots are the most common application of large language models. With access to large enough datasets — both internally and externally — chatbots and digital workers are now capable of understanding user intention and basic emotion to provide improved customer service.

Consider a frustrated customer connected with a service chatbot. Based on the type of language used and the way sentences are constructed, the chatbot can recognize the need for speedy resolution and connect the customer with the ideal service agent. Artisan AI Sales Reps go even deeper. These digital employees act very much like a human worker and can respond to user inquiries, communicate with your team, and much more.

Language Translation

LLMs are also being used in language translation. Given their ability to pull meaning and context from user-generated input, AI-driven models are ideally suited to ensuring translations aren’t simply direct transformations of words and phrases. Instead, LLMs accurately identify and carry over the subtleties and meaning from input language to output content.

Document Analysis

For industries such as finance, law, and healthcare, LLMs can be used to identify key themes, pinpoint recurring ideas, or discover issues with reporting and documentation that must be addressed before reports are filed or businesses take action.

What’s Next?

The nature of AI language models means they’re always improving. More data means more context, and a greater ability to deliver accurate results.

But what comes next? As noted by Forbes, one significant change already underway is the explosive growth of generative AI tools, such as ChatGPT. This natural language processing (NLP) tool makes it possible for people to have human-like conversations with AI. Initially, these tools could draw only on the data they were trained on. Newer versions of ChatGPT are capable of searching the Internet at large for data to create effective replies.

Despite advancements, however, AI tools are not yet perfect. While they can produce human-like responses, these responses may not be accurate. This is because they’re designed to generate answers that are plausible and easy to parse, but not necessarily factual. This creates another opportunity for AI growth: knowledge retrieval. Here, LLMs are provided with access to external knowledge sources and use these sources to ensure answers are factual and accurate as well as easy to read.
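A toy version of knowledge retrieval might look like the sketch below. It picks the passage that shares the most words with the question and places it in the prompt. Production systems typically use embedding-based search rather than word overlap, and everything here is an assumption for illustration: the passages in `KNOWLEDGE_BASE` are made-up placeholders, and `retrieve` and `build_prompt` are hypothetical helpers.

```python
import re
from typing import List, Set

# Placeholder passages standing in for an external knowledge source.
KNOWLEDGE_BASE = [
    "Refunds are issued within 14 days of a return request.",
    "Support is available Monday to Friday, 9am to 5pm.",
    "Orders ship within two business days.",
]


def tokenize(text: str) -> Set[str]:
    """Lowercase the text and return its set of words and numbers."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, documents: List[str]) -> str:
    """Return the document that shares the most words with the question."""
    question_words = tokenize(question)
    return max(documents, key=lambda doc: len(question_words & tokenize(doc)))


def build_prompt(question: str, documents: List[str]) -> str:
    """Place the retrieved passage ahead of the question for the model."""
    source = retrieve(question, documents)
    return f"Answer using only this source:\n{source}\n\nQuestion: {question}"


print(build_prompt("When are refunds issued?", KNOWLEDGE_BASE))
```

The model is then asked to answer from the supplied passage rather than from whatever sounds plausible, which is the core idea behind grounding responses in external sources.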

There’s also a growing demand for digital workers capable of automating tasks in a specific role. For example, digital workers might act as sales representatives, marketers, designers, or recruiters. Using advanced AI, these workers are capable of carrying out all the tasks associated with their role, and can also interact directly with human workers. In effect, they act as full-fledged members of business teams, albeit with lower costs and improved performance.

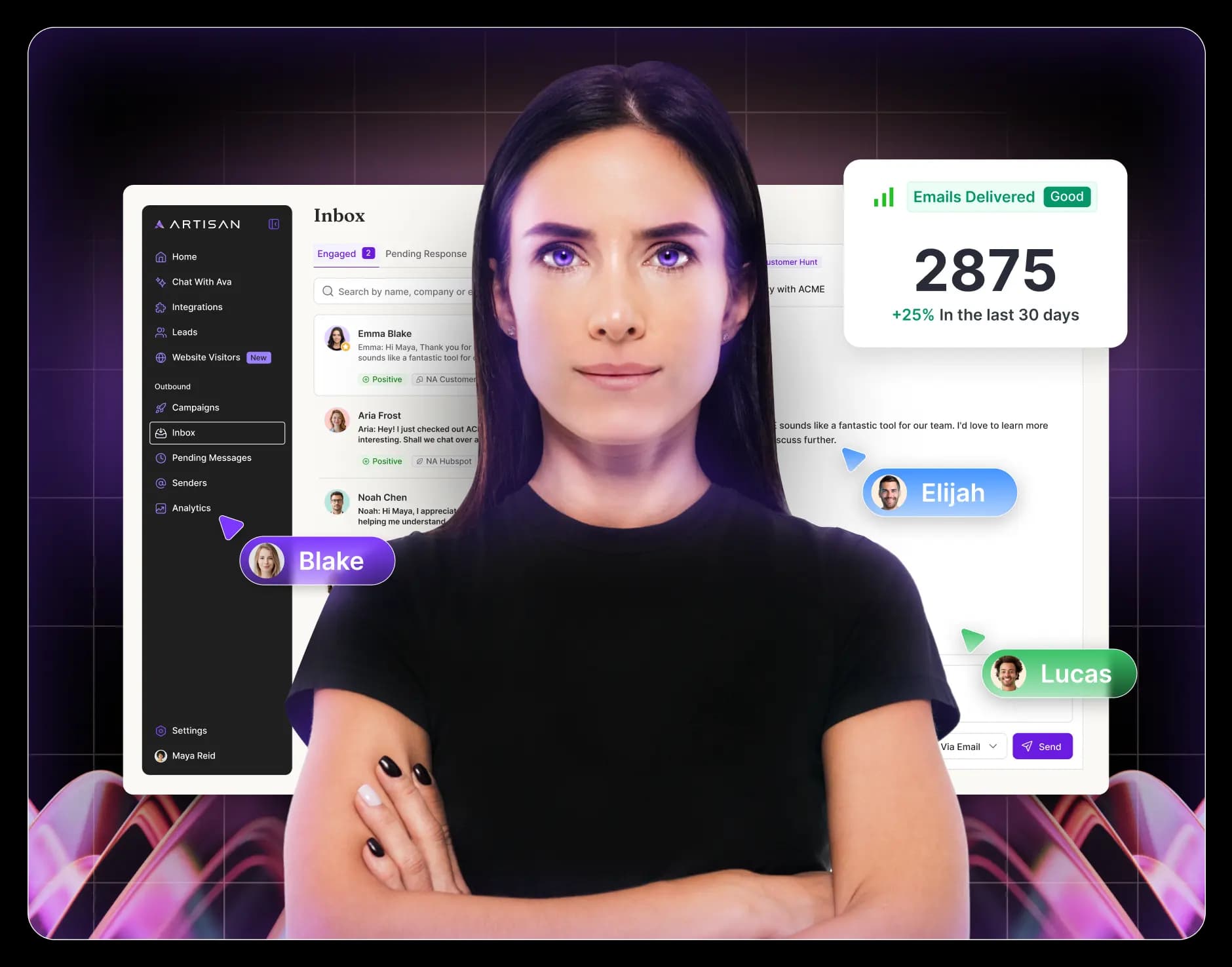

This includes Artisan AI’s sales rep, Ava. Ava is a fully automated outbound sales rep capable of doing everything from booking meetings to sending emails to responding to customer inquiries. Also, much like a real worker, Ava learns over time. Her built-in cutting-edge analytics help her self-improve and optimize her performance.

It’s also worth noting that as AI language models evolve, so too will the role of human workers. While there’s a growing fear of AI replacing human staff, the far more likely scenario is that roles will simply shift. While AI might handle the heavy lifting of drafting a marketing email or creating a web design, humans in the loop remain essential to review and revise ideas before they go live.

The Bottom Line: AI Language Models and Language 2.0

While AI will never be quite “human”, it’s inching closer and closer to the mark. AI language models are a key step in this progression — the ability to understand content and context and improve this understanding over time sets the stage for new iterations of AI capable of answering more questions, delivering more accurate results, and streamlining key business processes.

Author:

Jaspar Carmichael-Jack
